1. Experimenting with Different Models

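Overfitting is what happens when a model becomes so complex that it achieves high accuracy on the training data but predicts poorly on validation data. Underfitting is the opposite case: the model is too simple or poorly parameterized to capture the patterns in the data, so its accuracy is low even on the training data.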
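To make the two definitions concrete, here is a minimal sketch that uses only the Python standard library. It is not the course's model: a piecewise-constant regressor with a configurable number of bins stands in for a decision tree with a configurable number of leaves, and the data, the helper names (`make_data`, `fit_bins`, `mae`) and the noise level are all assumptions made for illustration.

```python
import math
import random
from statistics import median


def make_data(n, seed):
    """Synthetic 1-D regression data: y = sin(2*pi*x) + Gaussian noise."""
    rng = random.Random(seed)
    xs = [rng.random() for _ in range(n)]
    ys = [math.sin(2 * math.pi * x) + rng.gauss(0, 0.3) for x in xs]
    return xs, ys


def fit_bins(xs, ys, n_bins):
    """Piecewise-constant model: one median prediction per equal-width bin."""
    buckets = [[] for _ in range(n_bins)]
    for x, y in zip(xs, ys):
        buckets[min(int(x * n_bins), n_bins - 1)].append(y)
    fallback = median(ys)  # used for bins that received no training points
    return [median(b) if b else fallback for b in buckets]


def mae(model, xs, ys):
    """Mean absolute error of the binned model on (xs, ys)."""
    n_bins = len(model)
    errors = [abs(model[min(int(x * n_bins), n_bins - 1)] - y)
              for x, y in zip(xs, ys)]
    return sum(errors) / len(errors)


train_x, train_y = make_data(200, seed=0)
val_x, val_y = make_data(200, seed=1)

# More bins means a more complex model, analogous to more leaves in a tree.
results = {}
for n_bins in [1, 4, 16, 64, 256]:
    model = fit_bins(train_x, train_y, n_bins)
    results[n_bins] = (mae(model, train_x, train_y), mae(model, val_x, val_y))
    print(f"bins: {n_bins:>3}  train MAE: {results[n_bins][0]:.3f}  "
          f"validation MAE: {results[n_bins][1]:.3f}")
```

In this setup the training error keeps shrinking as bins are added, while the validation error is high with a single bin (underfitting), lower at a moderate bin count, and higher again at 256 bins (overfitting).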

2. Code

from sklearn.metrics import mean_absolute_error

from sklearn.tree import DecisionTreeRegressor

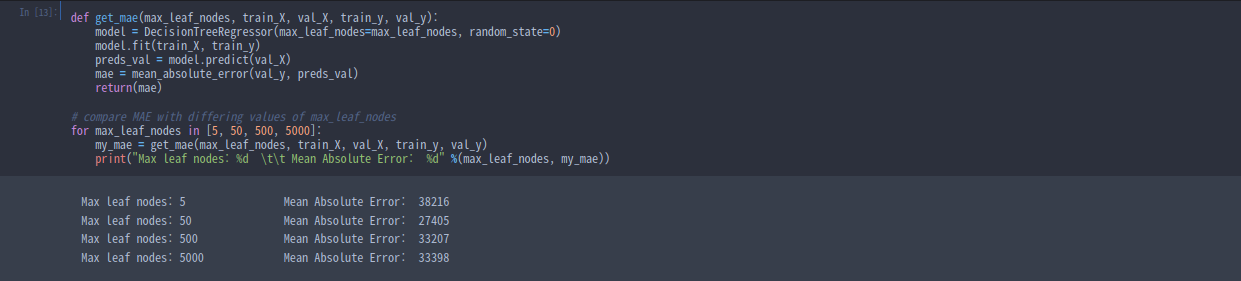

def get_mae(max_leaf_nodes, train_X, val_X, train_y, val_y):
    # Fit a tree limited to max_leaf_nodes leaves and return its validation MAE
    model = DecisionTreeRegressor(max_leaf_nodes=max_leaf_nodes, random_state=0)
    model.fit(train_X, train_y)
    preds_val = model.predict(val_X)
    mae = mean_absolute_error(val_y, preds_val)
    return mae

# compare MAE with differing values of max_leaf_nodes
for max_leaf_nodes in [5, 50, 500, 5000]:
    my_mae = get_mae(max_leaf_nodes, train_X, val_X, train_y, val_y)
    print("Max leaf nodes: %d \t\t Mean Absolute Error: %d" % (max_leaf_nodes, my_mae))

"""

The output will look like this:

Max leaf nodes: 5 Mean Absolute Error: 347380

Max leaf nodes: 50 Mean Absolute Error: 258171

Max leaf nodes: 500 Mean Absolute Error: 243495

Max leaf nodes: 5000 Mean Absolute Error: 254983

"""
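To read the trend in the numbers above more easily, the following small sketch computes the relative change in MAE between successive tree sizes. The values are copied from the output above; the names `reported_mae` and `changes` are introduced here only for illustration.

```python
# MAE values copied from the output above, keyed by max_leaf_nodes.
reported_mae = {5: 347380, 50: 258171, 500: 243495, 5000: 254983}

sizes = sorted(reported_mae)
changes = []
for prev, curr in zip(sizes, sizes[1:]):
    # Percentage change in validation MAE when moving to the larger tree
    pct = (reported_mae[curr] - reported_mae[prev]) / reported_mae[prev] * 100
    changes.append(round(pct, 1))
    print(f"{prev} -> {curr} leaves: MAE changes by {pct:+.1f}%")
```

The error falls by about 25.7% and then 5.7% as the tree grows from 5 to 500 leaves, and rises by about 4.7% at 5000 leaves, so 500 is the best of the four settings tried.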

Conclusion

- Overfitting: capturing spurious patterns that won't recur in the future, leading to less accurate predictions

- Underfitting: failing to capture relevant patterns, again leading to less accurate predictions

3. Exercise : Underfitting and Overfitting

Step 1 : Compare Different Tree Sizes

candidate_max_leaf_nodes = [5, 25, 50, 100, 250, 500]

# Write loop to find the ideal tree size from candidate_max_leaf_nodes

for max_leaf in candidate_max_leaf_nodes:
    print(get_mae(max_leaf, train_X, val_X, train_y, val_y))
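Instead of reading the best size off the printed values, the selection can be automated. The sketch below is one possible way to do it, not the course's reference solution. It assumes scikit-learn and NumPy are installed, and it generates synthetic data as a stand-in because the course's housing dataset is not included here; the `scores` dictionary and the synthetic `X`, `y` are assumptions made for illustration.

```python
import numpy as np
from sklearn.metrics import mean_absolute_error
from sklearn.model_selection import train_test_split
from sklearn.tree import DecisionTreeRegressor

# Synthetic stand-in data (assumption): a noisy sine curve with one feature.
rng = np.random.default_rng(0)
X = rng.uniform(0, 10, size=(500, 1))
y = np.sin(X[:, 0]) * 100 + rng.normal(0, 20, size=500)
train_X, val_X, train_y, val_y = train_test_split(X, y, random_state=0)


def get_mae(max_leaf_nodes, train_X, val_X, train_y, val_y):
    """Fit a tree with the given leaf limit and return its validation MAE."""
    model = DecisionTreeRegressor(max_leaf_nodes=max_leaf_nodes, random_state=0)
    model.fit(train_X, train_y)
    return mean_absolute_error(val_y, model.predict(val_X))


candidate_max_leaf_nodes = [5, 25, 50, 100, 250, 500]

# Map each candidate size to its validation MAE, then take the smallest.
scores = {n: get_mae(n, train_X, val_X, train_y, val_y)
          for n in candidate_max_leaf_nodes}
best_tree_size = min(scores, key=scores.get)
print("Best max_leaf_nodes on this synthetic data:", best_tree_size)
```

The value selected here depends on the synthetic data and will generally differ from the one found on the course dataset.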

# Store the best value of max_leaf_nodes (it will be either 5, 25, 50, 100, 250 or 500)

best_tree_size = 100

Step 2 : Fit Model Using All Data

# Fill in argument to make optimal size and uncomment

final_model = DecisionTreeRegressor(random_state = 1, max_leaf_nodes = 100)

# fit the final model and uncomment the next two lines

final_model.fit(X, y)

Source of the course: Kaggle Course, Underfitting and Overfitting
